A project supported by FAPESP's Innovative Research in Small Business program aims to make experimental technology more affordable and bring it to market

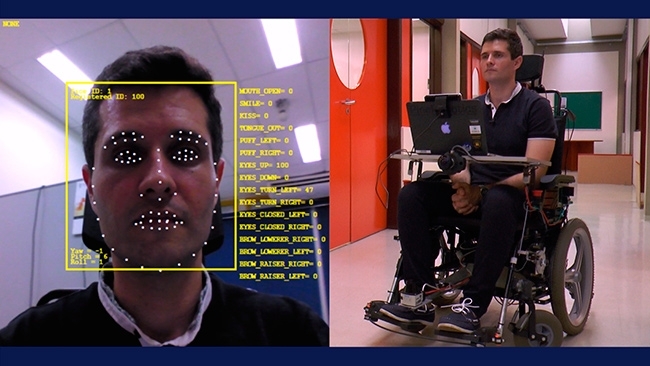

By Karina Toledo | Agência FAPESP – A wheelchair that can be controlled by small movements of the face, head or iris has been developed by researchers at the University of Campinas’s School of Electrical & Computer Engineering (FEEC-UNICAMP) in São Paulo State, Brazil.

The wheelchair is still considered experimental and expensive, but a project recently approved by FAPESP’s Innovative Research in Small Business (PIPE) program aims to adapt the technology to make it more affordable and marketable in Brazil within two years.

“We want to make sure the end product costs no more than twice as much as an ordinary motorized wheelchair, the kind controlled by a joystick, which currently costs about R$7,000 [now about US$2,000],” said Eleri Cardozo, a professor at FEEC-UNICAMP. Cardozo presented the results of his group’s research during the 3rd BRAINN Congress, which took place in Campinas on April 11-13, 2016.

According to Cardozo, the technology can be used by people with tetraplegia, stroke victims and patients with amyotrophic lateral sclerosis or other conditions that make fine hand movements impossible.

The research began in 2011 and was initially supported by FINEP, the Brazilian Innovation Agency. Currently, it is ongoing under the aegis of the Brazilian Research Institute for Neuroscience and Neurotechnology (BRAINN), one of the Research, Innovation and Dissemination Centers (RIDCs) funded by FAPESP.

“Our group was studying brain-computer interfaces, or BCIs, and we thought it would be interesting to evaluate the technology in a real-life situation,” Cardozo recalled. BCIs involve signal acquisition and processing methods that permit communication between the brain and an external device.

The group then purchased a conventional motorized wheelchair, removed the joystick and fitted the wheelchair with several devices typically found in robots, such as sensors capable of measuring the distance to a wall or detecting a sloping floor.

The prototype was also equipped with a notebook computer that sent commands directly to the wheelchair and a 3D camera with Intel’s RealSense technology, which enables the user to interact with a computer by means of facial expressions or body movements.

“The camera identifies more than 70 points on the face – around the mouth, nose and eyes – and simple commands can be extracted from movements at these points, such as forward, back, left or right, and, most importantly, stop,” Cardozo said.
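To illustrate how simple commands might be extracted from the movement of facial points, the Python sketch below compares the current position of one tracked point (assumed here to be the nose tip) with its position in a neutral pose and thresholds the displacement. The choice of landmark, the threshold, the direction convention and the function name are all hypothetical; the article does not describe how the UNICAMP software performs this step, and no RealSense API is used.

```python
from enum import Enum


class Command(Enum):
    FORWARD = "forward"
    BACK = "back"
    LEFT = "left"
    RIGHT = "right"
    STOP = "stop"


def command_from_displacement(neutral, current, threshold=0.05):
    """Map the displacement of one tracked facial point to a command.

    neutral, current: (x, y) in normalized image coordinates, with y growing
    downward as in most image conventions. The threshold is a hypothetical
    value; movements smaller than it yield STOP, so the chair halts whenever
    the user returns to the neutral pose.
    """
    dx = current[0] - neutral[0]
    dy = current[1] - neutral[1]
    if max(abs(dx), abs(dy)) < threshold:
        return Command.STOP
    # Assumed convention: the dominant axis decides the command.
    if abs(dx) >= abs(dy):
        return Command.RIGHT if dx > 0 else Command.LEFT
    # Moving the point up (negative dy) is assumed to mean "forward".
    return Command.BACK if dy > 0 else Command.FORWARD
```

Making STOP the default for small movements is a deliberate choice in this sketch, reflecting Cardozo's point that stopping is the most important command.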

The user can also control the computer by voice, but voice commands are considered less reliable than facial expressions because of variations in vocal pitch and timbre, as well as possible interference from background noise.

“These limitations can be worked around by defining a small number of commands,” Cardozo said. “In addition, the software includes a training function that optimizes the identification of commands for a specific user.”
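One common way to build a per-user training function is a nearest-centroid classifier: during calibration the user repeats each command a few times, the software stores the average feature vector for each command, and at run time the closest average wins. The Python sketch below assumes this technique; the article does not say which method the UNICAMP software uses, and the feature vectors (for example, distances between facial points) and the rejection distance are hypothetical. Keeping the number of commands small, as Cardozo suggests, keeps the centroids far apart and the classification easier.

```python
import math


class NearestCentroidTrainer:
    """Hypothetical per-user calibration: one mean feature vector per command."""

    def __init__(self, max_distance=1.0):
        self.centroids = {}
        # Inputs farther than this from every centroid are rejected.
        self.max_distance = max_distance

    def fit(self, samples):
        """samples: dict mapping a command name to a list of feature vectors."""
        self.centroids = {}
        for command, vectors in samples.items():
            n = len(vectors)
            dim = len(vectors[0])
            self.centroids[command] = [
                sum(v[i] for v in vectors) / n for i in range(dim)
            ]
        return self

    def predict(self, features):
        """Return the closest command, or None if nothing is close enough."""
        best, best_dist = None, math.inf
        for command, centroid in self.centroids.items():
            dist = math.dist(features, centroid)
            if dist < best_dist:
                best, best_dist = command, dist
        return best if best_dist <= self.max_distance else None
```

Returning None for unfamiliar inputs lets the caller treat an ambiguous expression as "no command" rather than guessing.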

For patients with especially severe tetraplegia, who cannot use facial expressions, the group is also working on a BCI device that extracts signals directly from the brain via external electrodes and converts them into commands. The researchers have not yet found a way to integrate this device into the robotized wheelchair.

“We’ve performed demonstrations in which a person sitting at a desk near the wheelchair user wore a helmet with electrodes and controlled the wheelchair,” Cardozo said. “Before fitting the BCI device to the wheelchair, we have to address a few issues, such as power supply. The cost of the technology is still very high, but a new low-cost generation with a 3D-printable helmet is in the pipeline.”

A Wi-Fi antenna has also been fitted to the wheelchair to enable a carer to steer it remotely via the internet. “These interfaces require a potentially tiring degree of concentration on the user’s part, and we’ve built in this antenna so that another person can assume control of the wheelchair at any time,” Cardozo explained.
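Letting a carer "assume control of the wheelchair at any time" implies some rule for deciding which source of commands drives the chair. The Python sketch below shows one possible arbitration rule, assuming that a carer's takeover switches the active source and that a "stop" from either side always wins. Both rules, and the class and method names, are hypothetical and not taken from the UNICAMP design.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ControlArbiter:
    """Hypothetical arbiter between the user's interface and a remote carer."""

    remote_active: bool = False  # True while the carer controls the chair over Wi-Fi

    def take_over(self) -> None:
        self.remote_active = True

    def release(self) -> None:
        self.remote_active = False

    def select(self, user_cmd: Optional[str], remote_cmd: Optional[str]) -> str:
        # Safety assumption: a "stop" from either side always wins.
        if "stop" in (user_cmd, remote_cmd):
            return "stop"
        chosen = remote_cmd if self.remote_active else user_cmd
        # With no command from the active source, default to stopping.
        return chosen if chosen is not None else "stop"
```

Letting the user's own stop command override the carer is a conservative choice made for this sketch; a real system might weigh this differently.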

Startup

The interface based on facial expression capture and processing is the most promising of all the methodologies tested so far by the team at UNICAMP. For this reason, the interface will be the focus of the project developed under the aegis of PIPE, with Paulo Gurgel Pinheiro as the principal investigator.

A startup called HOO.BOX Robotics has been established. “It’s a spinoff from my postdoctoral research, which set out to develop wheelchair steering interfaces requiring the least possible effort from the user,” Pinheiro said. “Initially, we tested sensors capable of capturing contractions of the facial muscles. We then upgraded the device to use imaging technology that captures facial expressions without the need for sensors.”

Instead of creating a robotized wheelchair like UNICAMP’s prototype, Pinheiro’s group decided to prioritize low cost. The project therefore focuses on the development of software and a mechanical clamp or claw attached to the joystick so that it can be used with any motorized wheelchair already on the market.

“Our idea is that the user will be able to download the facial expression processing software to their notebook, which will be connected to the ‘mini-claw’ via a USB port,” Pinheiro explained. “The user will ‘teach’ the software to recognize specific facial movements such as a kiss, half-smile, wrinkled nose, puffed-out cheeks or raised eyebrows, and assign each of these actions to driving the wheelchair forward or backward, turning left or right, and stopping it. The software sends the command to the claw, which moves the joystick. This solution doesn’t require any adaptation of the wheelchair’s structure and hence avoids voiding the warranty.”
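The flow Pinheiro describes (the user assigns expressions to driving commands, and the software forwards each recognized command to the claw over USB) can be sketched in Python as follows. The one-byte command codes, the function names and the rule that unassigned expressions are sent as "stop" are hypothetical; the article does not describe Wheelie's protocol. To stay self-contained, the sketch writes to any object with a `write` method, so an in-memory buffer can stand in for the USB serial port.

```python
import io

# Hypothetical one-byte codes understood by the claw's firmware.
COMMAND_CODES = {"forward": b"F", "back": b"B", "left": b"L", "right": b"R", "stop": b"S"}


def build_profile(assignments):
    """Validate a user's expression-to-command assignments."""
    profile = {}
    for expression, command in assignments.items():
        if command not in COMMAND_CODES:
            raise ValueError(f"unknown command: {command}")
        profile[expression] = command
    # Require at least one way to stop the chair.
    if "stop" not in profile.values():
        raise ValueError("profile must assign an expression to 'stop'")
    return profile


def send_expression(profile, expression, port):
    """Translate a recognized expression and write its code to the claw.

    Expressions without an assignment are sent as 'stop', a conservative
    default assumed here rather than taken from the article.
    """
    command = profile.get(expression, "stop")
    port.write(COMMAND_CODES[command])
    return command


if __name__ == "__main__":
    profile = build_profile({"kiss": "forward", "raised eyebrows": "stop"})
    fake_port = io.BytesIO()  # stands in for the USB connection to the claw
    send_expression(profile, "kiss", fake_port)
    print(fake_port.getvalue())
```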

He expects a prototype of the system, known as Wheelie, to be ready by early 2017. Two challenges will have to be surmounted in this period: improving the classification of facial expressions so that signal interpretation is not impaired by differences in ambient lighting and ensuring that only the wheelchair user’s facial expressions are captured when other people are around.

“In the future, the technology will also be useful as a rehabilitation aid for people who have had a stroke or some other kind of brain injury, as they’ll be motivated to keep up their treatment by seeing how much progress they’re making with movement,” Pinheiro said.

Republish

Agência FAPESP licenses its news via Creative Commons (CC-BY-NC-ND) so that it can be republished free of charge and in a simple way by other digital or print outlets. Agência FAPESP must be credited as the source of the content being republished, and the name of the reporter (if any) must be attributed. These rules are detailed in FAPESP's Digital Republishing Policy.