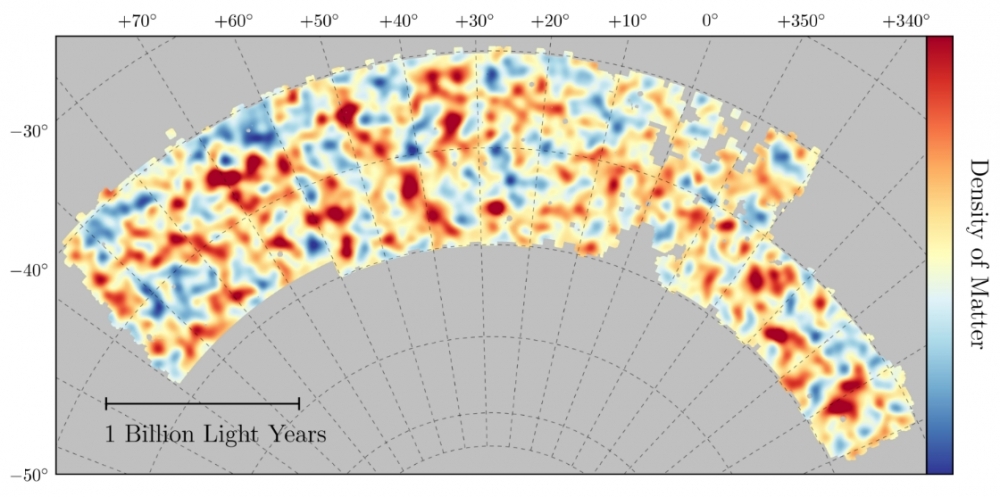

The map produced by the Dark Energy Survey covers about 1/30th of the sky and shows the distribution of dark matter in this region. Concentrations of dark matter are above average in areas shaded red, below average in areas shaded blue (image: Dark Energy Survey)

By José Tadeu Arantes | Agência FAPESP – Known matter (called “baryonic”) corresponds to only 5% of the contents of the Universe. Another 25% is unknown dark matter, and 70% is dark energy, which is even more enigmatic. These percentages, rounded here, were established in previous research and have now been confirmed with noteworthy numerical convergence by the Dark Energy Survey (DES).

DES is an international collaborative effort to map the Universe on a huge scale, carrying out a deep, wide-area sweep of about one-eighth of the sky (5,000 square degrees) and gathering data on more than 300 million galaxies, 100,000 galaxy clusters and 2,000 supernovae, as well as millions of stars in the Milky Way and objects in the Solar System.

Activities began in 2013 and are scheduled to continue until 2018. Systematized information from the first year has just been published. The data enabled the production of a map with 26 million galaxies, covering approximately 1/30th of the sky and showing the heterogeneous distribution of dark matter in a swath billions of light-years in length.
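The sky fractions quoted above can be checked with simple geometry: the whole celestial sphere spans 4π steradians, or about 41,253 square degrees. The short sketch below does that arithmetic; the figure of roughly 1,375 square degrees for 1/30th of the sky is our own conversion, not a number given by the collaboration.

```python
import math

# Whole celestial sphere: 4π steradians converted to square degrees (≈ 41,253 deg²).
FULL_SKY_DEG2 = 4 * math.pi * (180 / math.pi) ** 2


def sky_fraction(area_deg2):
    """Fraction of the full sky covered by a patch of the given area."""
    return area_deg2 / FULL_SKY_DEG2


print(f"Full sky: {FULL_SKY_DEG2:,.0f} deg²")
print(f"5,000 deg² is {sky_fraction(5000):.3f} of the sky (about 1/{1 / sky_fraction(5000):.1f})")
print(f"1/30 of the sky is about {FULL_SKY_DEG2 / 30:,.0f} deg²")
```

The result confirms that 5,000 square degrees is close to one-eighth of the sky.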

Another highlight of the material released by the collaboration is that the systematized Year 1 data are consistent with the simplest interpretation of the nature of dark energy. According to this interpretation, the mysterious ingredient that is accelerating the expansion of the Universe, rather than slowing it, is vacuum energy, which corresponds to the cosmological constant (Λ) that Einstein initially proposed and later abandoned. In this model, the total amount of this energy increases as the Universe expands, but its density remains constant in space and time.

Led by the Fermi National Accelerator Laboratory (Fermilab) in the United States, DES involves over 400 scientists from several countries, including a number of Brazilians. The DES–Brazil consortium is coordinated by the Interinstitutional e-Astronomy Laboratory (LIneA), headquartered in Rio de Janeiro. LIneA runs a DES portal and also coordinates Brazil’s participation in the Large Synoptic Survey Telescope (LSST), which will replace DES and is under construction in Chile.

In São Paulo State, the research is led by Rogério Rosenfeld, ex-director of São Paulo State University’s Theoretical Physics Institute (IFT-UNESP) and vice coordinator of the National Institute of Science and Technology (INCT) on the e-Universe; Marcos Lima, a professor at the University of São Paulo’s Physics Institute (IF-USP); and Flavia Sobreira, a professor at the University of Campinas’s Gleb Wataghin Institute of Physics (IFGW-UNICAMP). All three are supported by FAPESP.

“The region mapped by DES in its first year of activity is the largest contiguous area of the sky ever observed in a single survey,” Rosenfeld told Agência FAPESP. “This involved the use of a very high-resolution 570-megapixel digital camera, DECam, short for Dark Energy Camera, installed in the focal plane of the four-meter-diameter telescope at the Cerro Tololo Inter-American Observatory in the Chilean Andes.

“The millions of galaxies were photographed using five different filters, each of which images the sky in a different band of the visible spectrum, defined by a specific wavelength interval. In this way, it was possible to estimate their redshifts.”

The term redshift is worth explaining. As discovered in 1929 by American astronomer Edwin Hubble (1889-1953), the Universe is expanding: galaxies are moving away from each other, and the farther a galaxy is from Earth, the faster it is receding from us. As a galaxy recedes, the light it emits is stretched to longer wavelengths and hence shifted toward the red end of the spectrum. Because recession speed grows with distance, redshift also increases with distance; for nearby galaxies the effect can be understood as a Doppler shift.
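The relationship described above can be made concrete. Redshift z is the fractional stretch of a wavelength, z = (λ_observed − λ_emitted) / λ_emitted. For nearby galaxies the recession velocity is approximately v ≈ cz, and Hubble's law, v = H₀d, then gives the distance. The sketch below assumes a round value of H₀ = 70 km/s per megaparsec, which is an illustrative assumption rather than a figure from the article, and it is only valid for z much smaller than 1.

```python
C_KM_S = 299_792.458  # speed of light in km/s
H0 = 70.0  # ASSUMED Hubble constant in km/s/Mpc (illustrative round value)


def redshift(observed_nm, emitted_nm):
    """z = (observed - emitted) / emitted: the fractional stretch of the wavelength."""
    return (observed_nm - emitted_nm) / emitted_nm


def hubble_distance_mpc(z, h0=H0):
    """Low-redshift approximation: v ≈ c z, then Hubble's law d = v / H0."""
    velocity_km_s = C_KM_S * z
    return velocity_km_s / h0


# A line emitted at 500 nm and observed at 505 nm is stretched by 1%.
z = redshift(505.0, 500.0)
print(f"z = {z:.3f}, d ≈ {hubble_distance_mpc(z):.1f} Mpc")
```

At higher redshifts, such as those reached by DES, the distance depends on the full expansion history of the Universe, so this linear rule no longer applies.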

To determine the distance to an observed object precisely, astronomers use spectroscopy, a technique that breaks down the light emitted by an object into its component wavelengths in order to measure its redshift very accurately. Because DES images encompass hundreds of millions of galaxies, however, this technique is impracticable, so scientists working on the survey use photometry instead. Photometry, which measures the intensity of light through different filters, is less accurate, but the loss of precision is more than offset by the quantity of data.
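One common way to turn brightness in a few filters into a redshift estimate is template fitting: predict how a model spectrum would look through each filter at many trial redshifts, and keep the redshift whose prediction best matches the measurements. The toy sketch below illustrates that idea with a least-squares fit. Everything in it is hypothetical: the five top-hat filters and their wavelength ranges, and the step-shaped "spectral break" standing in for a galaxy spectrum, are made-up simplifications, not the DES filters or the collaboration's actual photometric-redshift methods.

```python
# HYPOTHETICAL top-hat filters: name -> (lower edge, upper edge) in nm.
FILTERS = {
    "b1": (420.0, 540.0),
    "b2": (580.0, 700.0),
    "b3": (710.0, 850.0),
    "b4": (860.0, 960.0),
    "b5": (960.0, 1060.0),
}
BREAK_REST_NM = 400.0  # HYPOTHETICAL rest-frame wavelength of a toy spectral break


def band_fluxes(z):
    """Mean flux of a toy spectrum (1 below the break, 3 above it) in each filter."""
    break_obs = BREAK_REST_NM * (1.0 + z)  # redshift stretches the break by (1 + z)
    fluxes = []
    for lo, hi in FILTERS.values():
        frac_above = min(max((hi - break_obs) / (hi - lo), 0.0), 1.0)
        fluxes.append(1.0 + 2.0 * frac_above)
    return fluxes


def photo_z(observed, z_max=2.0, step=0.01):
    """Grid search for the redshift whose template best matches the observed fluxes."""
    best_z, best_chi2 = 0.0, float("inf")
    for k in range(int(round(z_max / step)) + 1):
        z = k * step
        model = band_fluxes(z)
        # Overall brightness is unknown, so fit a free scale factor by least squares.
        scale = sum(o * m for o, m in zip(observed, model)) / sum(m * m for m in model)
        chi2 = sum((o - scale * m) ** 2 for o, m in zip(observed, model))
        if chi2 < best_chi2:
            best_z, best_chi2 = z, chi2
    return best_z


# A galaxy twice as bright as the template, at z = 0.5, with no measurement noise.
observed = [2.0 * f for f in band_fluxes(0.5)]
print(f"estimated redshift: {photo_z(observed):.2f}")
```

Real photometric redshifts are noisier than this noise-free toy suggests, which is why the text calls the method less accurate than spectroscopy.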

“In addition to measuring the distance to a galaxy based on redshift, photometry also tells us its shape,” Rosenfeld said. “This is most important. One of the effects of matter in the Universe is the creation of gravitational lenses, which slightly distort the light received from distant sources. Photometry therefore enables DES to survey both galaxy distribution and the shape distortion produced by so-called ‘weak gravitational lensing’, so that it’s possible to map all the matter existing in the space between light sources and us – including dark matter, which is far more abundant.”

Unlike “strong gravitational lensing”, which produces multiple images of the same object, and even arcs or rings, weak gravitational lensing produces very subtle distortions, on the order of 1%, in the positions and shapes of observed galaxies. It is these distortions that enable DES to build an extremely precise map of the distribution of dark matter.
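A 1% distortion is far smaller than the natural variety of galaxy shapes, so a single galaxy reveals almost nothing. The signal emerges statistically: galaxies are randomly oriented, so their intrinsic shapes average out, while the lensing distortion is shared by all galaxies in a region. The toy simulation below shows this averaging. It assumes the standard weak-lensing approximation in which the observed ellipticity (written as a complex number) is the intrinsic ellipticity plus the shear; the intrinsic shape scatter of 0.25 and the sample sizes are illustrative assumptions, and real analyses also correct for effects such as telescope blurring that are ignored here.

```python
import cmath
import math
import random


def observed_ellipticities(n, shear, sigma_e=0.25, seed=1):
    """Toy galaxy shapes: random intrinsic ellipticity plus a small lensing shear.

    Ellipticity is a complex number |e| * exp(2iθ). In the weak-lensing limit the
    observed ellipticity is approximately intrinsic + shear. sigma_e is an ASSUMED
    scatter of intrinsic shapes.
    """
    rng = random.Random(seed)
    shapes = []
    for _ in range(n):
        magnitude = min(abs(rng.gauss(0.0, sigma_e)), 0.9)  # keep |e| < 1
        angle = rng.uniform(0.0, math.pi)  # random orientation of the galaxy
        intrinsic = magnitude * cmath.exp(2j * angle)
        shapes.append(intrinsic + shear)
    return shapes


def estimate_shear(shapes):
    """Random orientations average to zero, leaving the coherent shear."""
    return sum(shapes) / len(shapes)


true_shear = 0.01 + 0.0j  # a 1% distortion along one axis
for n in (100, 10_000, 200_000):
    g = estimate_shear(observed_ellipticities(n, true_shear))
    print(f"{n:>7} galaxies: estimated shear = ({g.real:+.4f}, {g.imag:+.4f})")
```

The statistical error shrinks roughly as one over the square root of the number of galaxies, which is why a survey of millions of galaxies can detect distortions this small.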

“We register the shapes of the galaxies in the catalogue and measure the distortions in them produced by the gravitational field existing in the space traversed by the light these objects emit. Because gravitational fields are caused by matter, this procedure enables us to map the distribution of matter. We then subtract the luminous matter to find the distribution of dark matter in the region concerned,” Sobreira said.

Cosmic evolution

To help understand dark matter’s gravitational contribution to the structure of the Universe, it is worth briefly recalling the most widely accepted model of cosmic evolution.

According to this model, the infant Universe was extremely hot and dense. As it expanded, temperature and density decreased. Dark matter interacts very weakly and therefore soon stopped exchanging energy with other components (or “decoupled”, as physicists prefer to say), while the rest of the Universe’s contents remained coupled in a hot plasma of baryons (which constitute known matter) and photons (which constitute electromagnetic radiation). This coupling persisted until expansion made the distance between plasma components large enough for photons to stop interacting with baryonic matter, leaving them free to travel independently through space.

This second decoupling, known as “recombination”, is believed to have taken place approximately 400,000 years after the Big Bang. Released from the pressure applied by photons, baryonic matter began clustering around the regions with the greatest gravitational potential, meaning those with the highest density of dark matter. Thus dark matter acted as a “gravitational attractor” for the first structures to emerge.

The decoupled photons now make up the cosmic background radiation, observed in the microwave band, while baryonic matter formed gas clouds, stars, planets and galaxies. Observation of this cosmic microwave background by NASA’s COBE mission (1989-93) and WMAP mission (2001-10), as well as ESA’s Planck mission (2009-13), provided a “snapshot” of the Universe when it was very young, only 400,000 years old. The ongoing observation of galaxy distribution by DES captures the state of affairs billions of years later. Surprisingly, these two “snapshots” are entirely compatible. “Combined with other observations, the datasets supplied by the two maps, which record different objects, enable us to reach the same numbers,” Sobreira said.

The nature of dark matter is still moot. “There’s almost a consensus in the scientific community that it’s a different kind of elementary particle that has no electrical charge and hence doesn’t interact with electromagnetic radiation,” Rosenfeld said. “Because it doesn’t interact with light, it would be more correct to call it ‘transparent’ instead of dark. DES works with the hypothesis that dark matter consists of ‘cold particles’. The word ‘cold’ in this case means only that these particles move at non-relativistic velocities.” Relativistic velocities are velocities that approach the speed of light.
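A standard way to quantify how "relativistic" a speed is uses the Lorentz factor, γ = 1 / √(1 − v²/c²). When γ is very close to 1, relativistic effects are negligible, which is what "non-relativistic" means; γ grows without limit as v approaches the speed of light. The speeds in the sketch below are arbitrary illustrative values, not measured properties of dark matter particles.

```python
import math


def lorentz_factor(v_over_c):
    """γ = 1 / sqrt(1 - v²/c²); γ ≈ 1 means relativistic effects are negligible."""
    if not 0.0 <= v_over_c < 1.0:
        raise ValueError("speed must satisfy 0 <= v/c < 1")
    return 1.0 / math.sqrt(1.0 - v_over_c ** 2)


# Illustrative speeds, as fractions of the speed of light.
for beta in (0.001, 0.1, 0.5, 0.9, 0.99):
    print(f"v = {beta:>5} c  ->  gamma = {lorentz_factor(beta):.4f}")
```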

The percentage of dark matter can be determined quite precisely using the recently released systematized data from DES Year 1, but its nature cannot. “The reason is that the nature of dark matter is manifested in the intensity of its self-interactions and the mass of its particles, so it has effects on smaller scales. It’s extremely complicated to reach a conclusive result via a large-scale study,” Lima said.

Although the results are still partial, the Y1 data have already made it possible to determine the quantity of dark energy very precisely. The calculation was based on the distribution of the nearer galaxies on the map and the lensing effect they have on more distant galaxies. The greater the share of dark energy, the faster the expansion and hence the fewer structures formed: structures grow through gravitational attraction, and the rapid expansion driven by dark energy counteracts that attraction. “Confirmation that dark energy accounts for 70% of the contents of the Universe was made possible by linking the number of structures measured by DES to previous measurements performed by other experiments,” Lima said.
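The link between dark energy and structure formation can be illustrated with the textbook "linear growth factor" D, which measures how much small density fluctuations have grown. For a flat universe containing only matter and a cosmological constant, D(a) = (5Ωm/2) E(a) ∫₀ᵃ da′ / (a′E(a′))³, with E(a) = √(Ωm a⁻³ + ΩΛ), where a is the scale factor (a = 1 today) and Ωm and ΩΛ are the matter and dark-energy fractions. The sketch below evaluates D today by simple numerical integration, ignoring radiation and assuming flatness; it illustrates the physical argument and is not the DES analysis pipeline. The case Ωm = 0.3 corresponds to the article's 5% + 25% matter and 70% dark energy.

```python
import math


def growth_factor_today(omega_m, n_steps=10_000):
    """Linear growth factor D at a = 1 for a flat universe of matter + cosmological constant.

    D(a) = (5 Ωm / 2) E(a) ∫_0^a da' / (a' E(a'))^3, with E(a) = sqrt(Ωm a^-3 + ΩΛ),
    normalized so that D = a in a matter-only universe. Radiation is ignored.
    """
    omega_l = 1.0 - omega_m  # flatness assumption
    h = 1.0 / n_steps
    integral = 0.0
    for k in range(n_steps):
        a = (k + 0.5) * h  # midpoint rule
        # 1 / (a E(a))^3 rewritten as a^1.5 / (Ωm + ΩΛ a^3)^1.5, finite as a -> 0
        integral += a ** 1.5 / (omega_m + omega_l * a ** 3) ** 1.5 * h
    e_today = math.sqrt(omega_m + omega_l)  # E(1) = 1 in a flat universe
    return 2.5 * omega_m * e_today * integral


for omega_m in (1.0, 0.3, 0.1):
    print(f"Ωm = {omega_m}, ΩΛ = {1 - omega_m:.1f}: D(today) = {growth_factor_today(omega_m):.3f}")
```

The more dark energy the model contains, the smaller the growth factor today, meaning less clustering; this is the effect that lets a count of structures constrain the amount of dark energy.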

“The next step is expected to involve linking them to another property of dark energy, which is its equation of state, defined as the ratio of its pressure to its density. With precise measurements of both the density of dark energy and its equation of state, it will be possible to infer the nature of this component.”
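Why the equation of state matters can be seen from a standard result: for a component with a constant equation of state w = p/(ρc²), energy conservation in an expanding universe implies that its density scales as ρ ∝ a^(−3(1+w)), where a is the scale factor. Ordinary matter has w = 0 and radiation w = 1/3; a cosmological constant has w = −1, so its density does not change, while the total energy in a growing volume increases as a³. The sketch below evaluates this scaling and assumes w is constant in time, which more general dark-energy models relax.

```python
def density_relative_to_today(a, w):
    """Density of a component with constant equation of state w, relative to today.

    rho ∝ a^(-3(1 + w)), with scale factor a = 1 today and a < 1 in the past.
    """
    return a ** (-3.0 * (1.0 + w))


components = (("matter", 0.0), ("radiation", 1.0 / 3.0), ("cosmological constant", -1.0))
for name, w in components:
    ratio = density_relative_to_today(0.5, w)
    print(f"{name:>22}: density when the Universe was half its size = {ratio:g} x today's")
```

Measuring w precisely therefore distinguishes a cosmological constant (density fixed) from dark-energy models whose density evolves.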

Lima noted that datasets on supernovae and galaxy clusters are still being analyzed and promise important news on dark energy soon; in particular, they should make it possible to compute almost directly the rate at which the Universe is expanding. In the late 1990s, the Supernova Cosmology Project and the High-z Supernova Search Team used supernovae as markers to discover that the expansion rate was accelerating. Americans Saul Perlmutter and Adam Riess, jointly with Australian Brian Schmidt, won the 2011 Nobel Prize in Physics for the discovery.
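Supernovae work as distance markers because Type Ia supernovae reach a roughly known peak brightness, so how faint they appear reveals how far away they are. Astronomers express this with the distance modulus, m − M = 5 log₁₀(d / 10 pc), where m is the apparent magnitude, M the absolute magnitude and d the distance in parsecs. The sketch below inverts that relation. The peak absolute magnitude of −19.3 is an assumed typical value, and the sketch ignores corrections such as dust extinction and the effects of redshift on the observed light.

```python
M_IA = -19.3  # ASSUMED typical peak absolute magnitude of a Type Ia supernova


def luminosity_distance_pc(apparent_mag, absolute_mag=M_IA):
    """Invert the distance modulus m - M = 5 log10(d / 10 pc) to get d in parsecs."""
    mu = apparent_mag - absolute_mag
    return 10 ** (mu / 5 + 1)


for m in (15.0, 20.0, 24.0):
    d_mpc = luminosity_distance_pc(m) / 1e6
    print(f"peak apparent magnitude {m}: d ≈ {d_mpc:,.0f} Mpc")
```

Comparing distances obtained this way with the supernovae's redshifts traces how the expansion rate has changed over time; distant supernovae turned out fainter than expected for a decelerating Universe.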

Where may all this lead? “Dark energy isn’t the only possible explanation for the acceleration in the rate of expansion of the Universe,” Lima said. “Another possibility is that we have to change our understanding of gravitation. Just as Einstein generalized Newton’s theory of gravitation, the debate now centers on whether we need to generalize Einstein’s theory by going beyond general relativity toward so-called ‘modified theories of gravity’. These two explanations, dark energy and modified gravity, make very different predictions about how the Universe is expanding, and above all about how disturbances grow in this expanding Universe. The data released so far are entirely consistent with the interpretation of dark energy as the cosmological constant, which is the simplest model of all, with an equation of state exactly equal to -1. But other possibilities can’t be ruled out.”

Republish

Agência FAPESP licenses news via Creative Commons (CC-BY-NC-ND) so that it can be republished free of charge and in a simple way by other digital or print outlets. Agência FAPESP must be credited as the source of the content being republished, and the name of the reporter (if any) must be attributed. These rules are detailed in FAPESP’s Digital Republishing Policy.